Well, that was fast

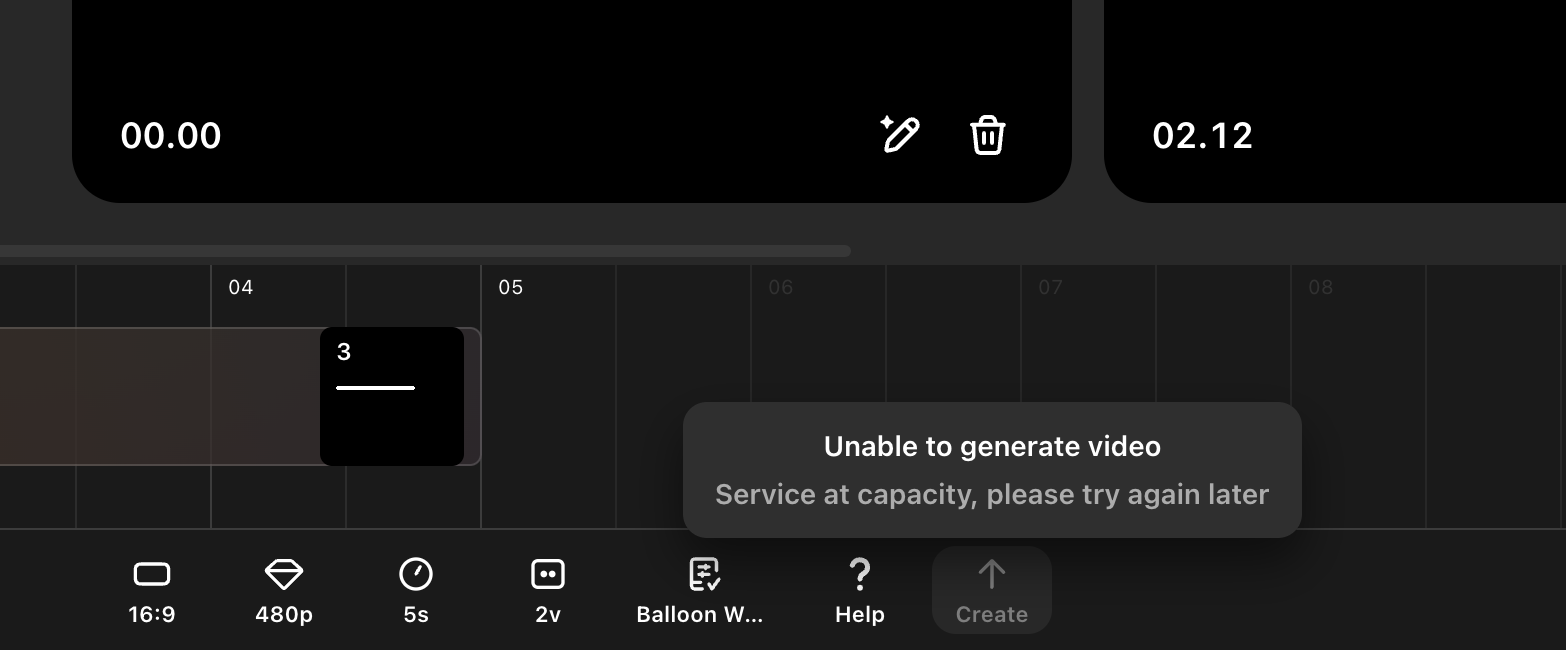

Guess I wasn’t the only one with the idea to hop on the Sora train. As of this moment, the system is at capacity, and while some features in the Sora interface, like storyboarding, still work, it can’t generate any new AI video.

It may take a while for things to calm down – it is Day 1, after all – but when they do, you’ll want to give Sora a spin. Trust me.

This is too easy

Editor at Large Lance Ulanoff here.

As soon as Sam Altman and company stopped speaking, I hopped onto Sora. First, I had to find it: it’s at Sora.com, and then I needed to log in. My corporate account didn’t offer support for Sora, but my personal ChatGPT account, which is ChatGPT Plus for now, did. Unlike other ChatGPT models, Sora is a stand-alone desktop app.

I verified my age (new for OpenAI, I think), and then I found myself inside Sora, which starts with a grid of other people’s AI video creations.

I didn’t really look around and instead started typing in the prompt field, where I asked for a middle-aged man building a rocketship by the ocean. I described a tranquil scene with the moon, the ocean lapping nearby, a campfire, and a friendly dog. I didn’t touch the default settings (5 secs, 480p) and instead selected the up arrow to generate my first video.

It only took a minute or so for a pair of options to appear. One was a 5-second clip of a dog that started with a tail for its head. The other 5-second video got the dog right but also had the man building a small rocketship model.

I’m currently awaiting a remix (I didn’t ask for a “strong remix”) that imagines a full-sized rocket. This second pass, which is possible on all Sora video creations, is taking a lot longer. Even so. Wow.

And that about wraps up the showcase for Sora. On the subject of availability, Sam Altman noted that Sora is available today in the United States and other countries, but as of now, OpenAI doesn’t know when it will launch in Europe and the UK.

In terms of generations, if you have a ChatGPT Plus account, you get 50 generations a month, while a ChatGPT Pro account basically allows unlimited generations.

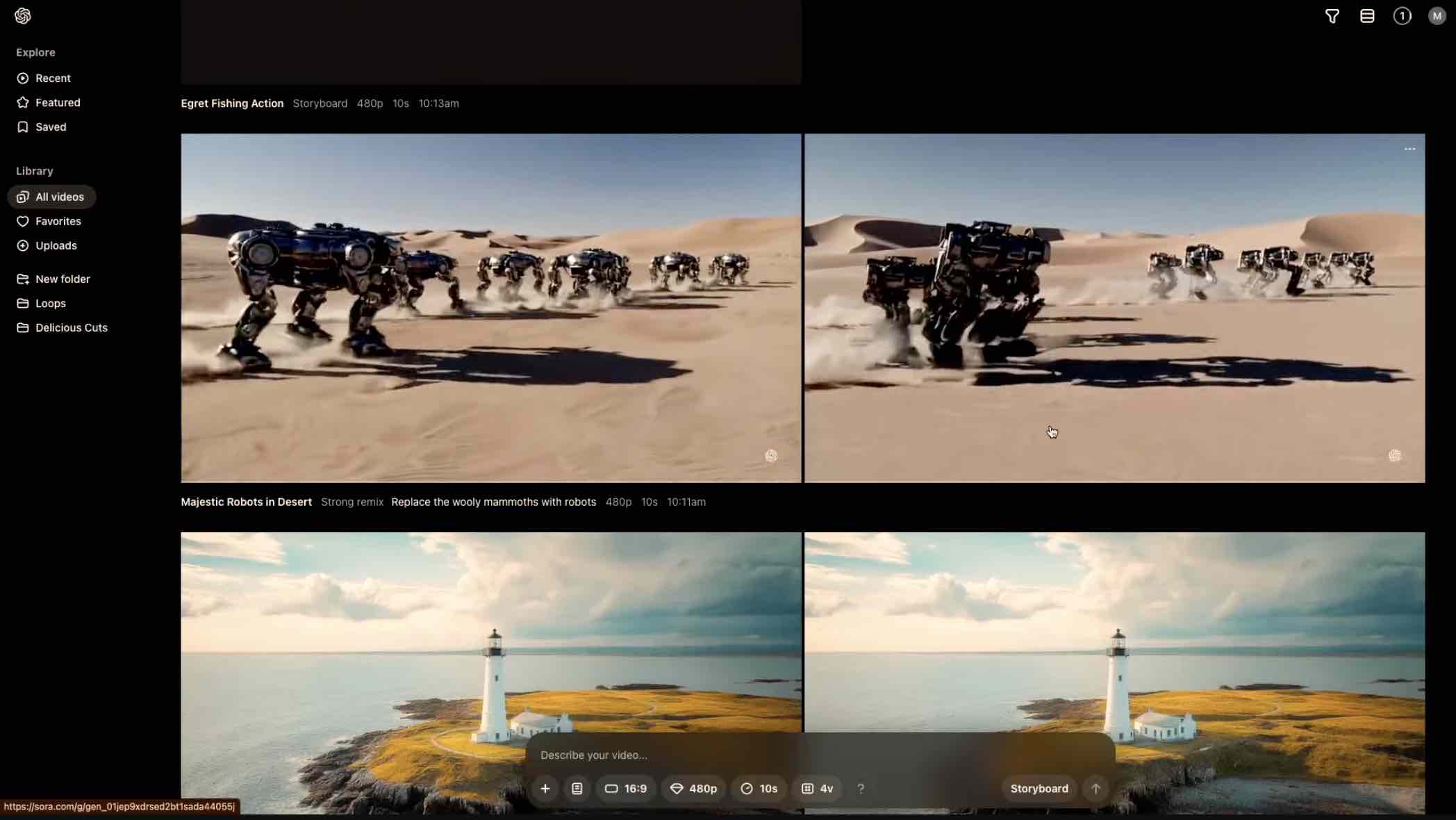

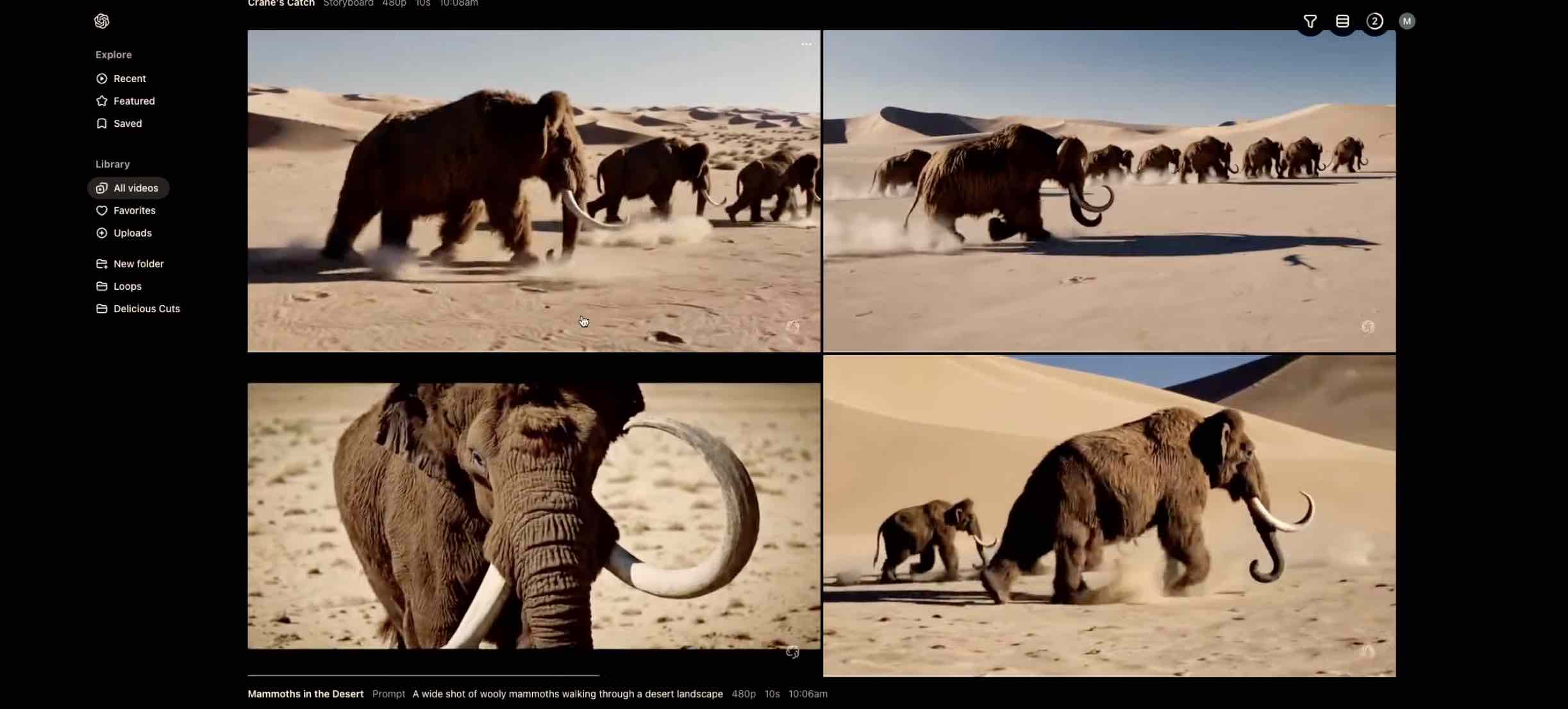

Of course, OpenAI showed off Sora’s remix functionality and swapped woolly mammoths for robots, and the results are certainly something. It wasn’t instantaneous like the original generation; it took a few minutes.

Now, the OpenAI team is demoing its first video generation, with Sam Altman providing the prompt, “Woolly mammoth walking through the desert.” Beyond the prompt, you can choose a resolution (480p to 1080p), length, and aspect ratio.

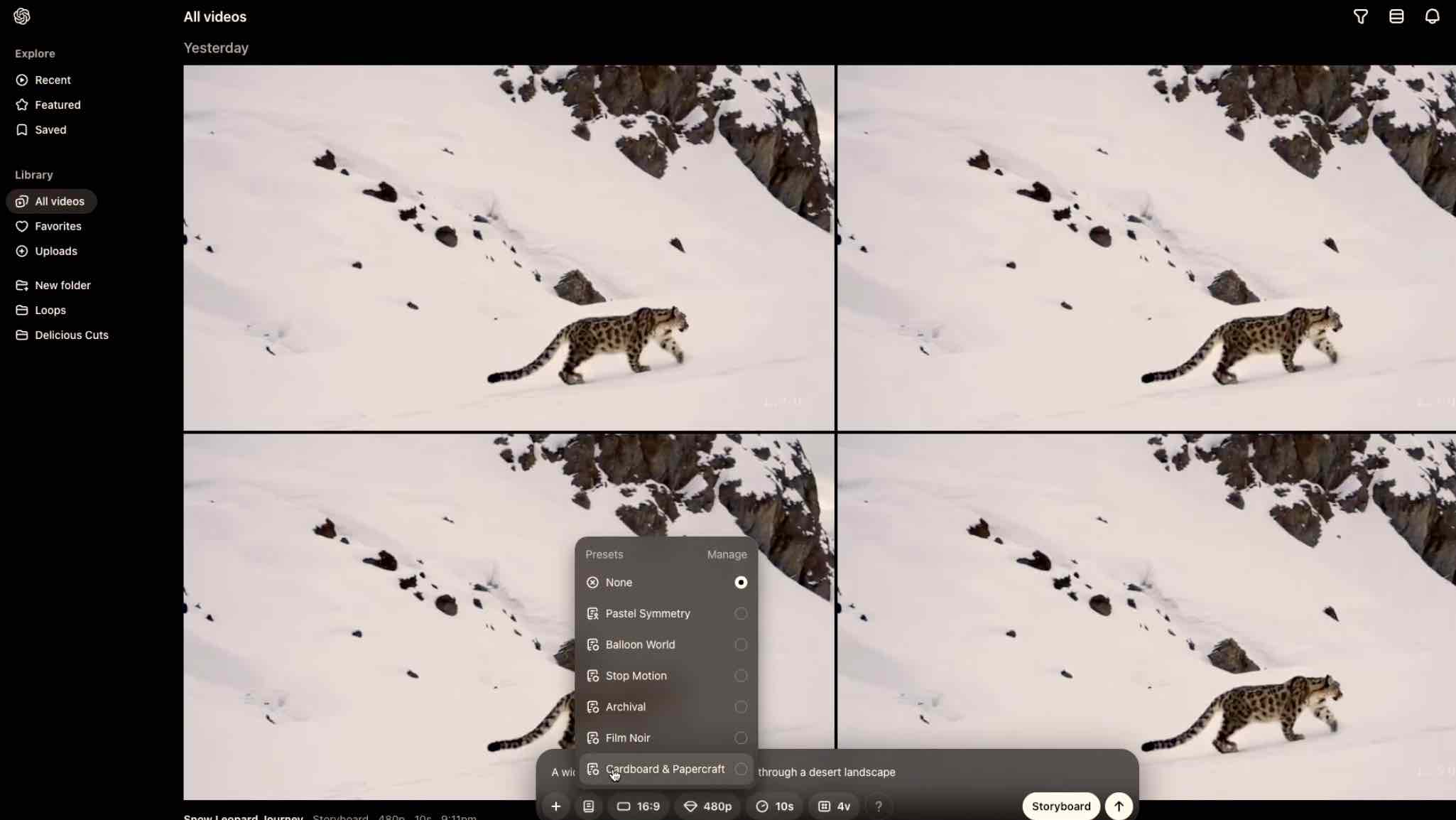

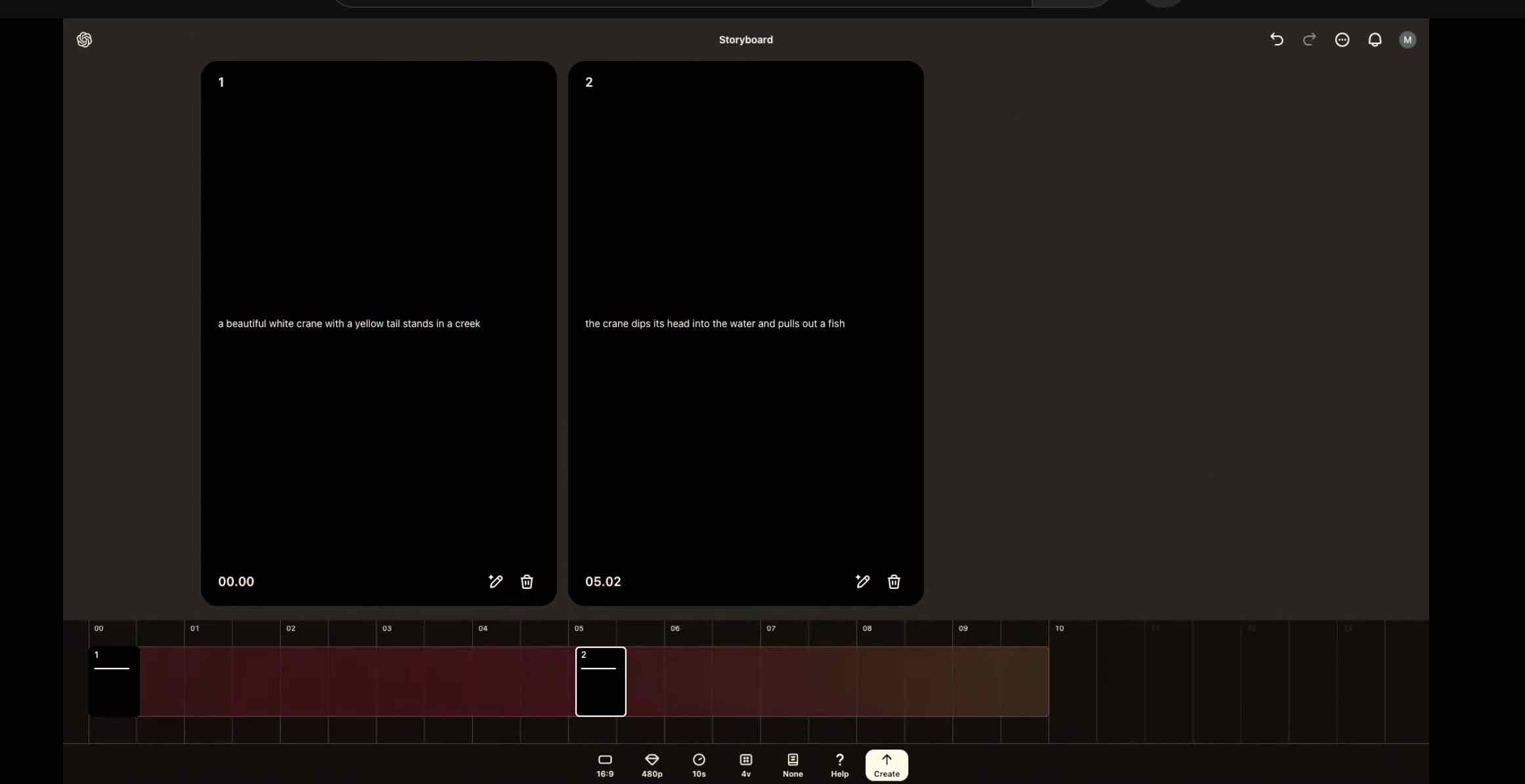

As we wait for that video to be generated, OpenAI is showing off a new “Storyboard” feature, which looks much like a standard timeline, except it’s one where you can drag cards and type in text describing what you’d like it to generate.

Maybe even cooler, though, is that you can use Storyboard to create a video from an image you uploaded; in the example, OpenAI uploaded a photo of a lighthouse to a card and then added text to generate it in a specific way.

And here’s the moment we were all waiting for – a look at what Sora created through the prompt “Woolly mammoth walking through the desert,” which generated four different versions. If it’s not what you wanted to see, you can remix it.

OpenAI’s Day 3 stream is live, and Chief Executive Officer Sam Altman wasted no time confirming that Sora is here. It’s launching today in the United States and “most countries” outside it, and will be available on Sora.com for those with a ChatGPT Plus account. Additionally, Sora Turbo is coming today with additional features, including faster processing times.

After a brief introduction about Sora and the versions arriving and the broader strokes of video being key to OpenAI, we’re now getting a demo of Sora and its interface. Via the “Featured” tab under ‘Explore’ in the interface, you can scroll through a plethora of videos made in Sora, and clicking on an individual video reveals more details about how it was created.

Day 3 of the 12 Days of OpenAI is about to kick off, and it’s safe to say that we’re expecting Sora, OpenAI’s text-to-video generator, to be the main topic, especially after Marques Brownlee posted a full review earlier this morning.

Just like with Days 1 and 2, OpenAI is streaming it on its YouTube channel, and a countdown has already begun.

As if we needed any more hints that Sora, OpenAI’s text-to-video generation tool, will be the Day 3 mystery announcement as part of 12 Days of OpenAI, Marques Brownlee just posted a video review of Sora on his YouTube channel.

Brownlee has been using Sora for a week and calls it, “a powerful tool that’s about to be in the hands of millions of people”. He calls the results he’s achieved with Sora both “horrifying and inspiring at the same time”.

As well as the quality of the video Sora can produce, we also get to see some of the cool features of Sora, like the Remix button, which lets you make some slight adjustments to a video you’ve just generated without having to type the whole prompt in again. Plus there’s a Storyboard editor, for stringing together prompts into a timeline.

All in all, from this review I’d say that Sora is looking very promising!

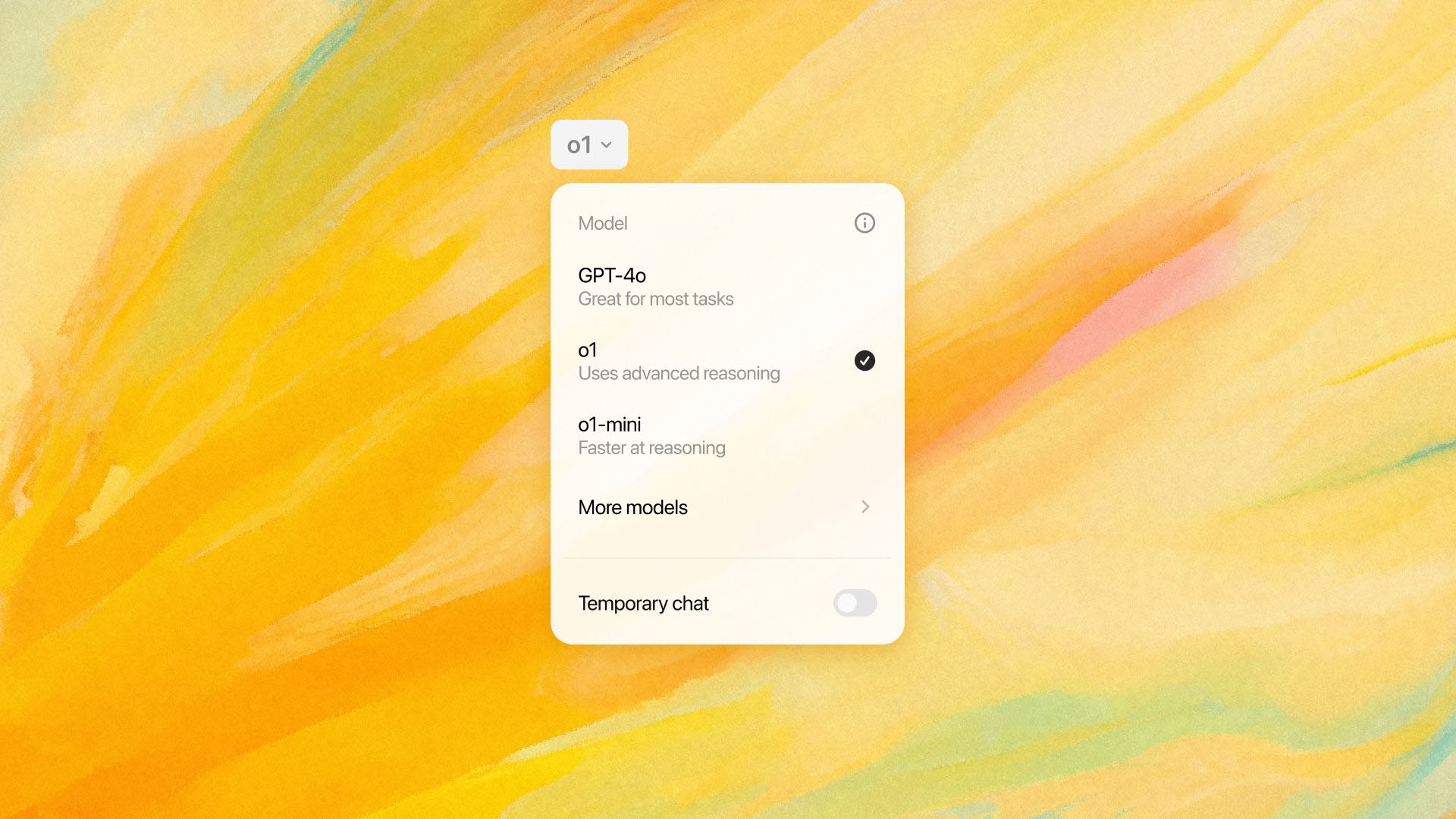

Circling back to Thursday’s announcement: OpenAI released ChatGPT o1, its new model, along with a faster o1-mini. o1 is better at reasoning and considers things for longer before responding. This makes it better at coding, math and writing.

Remember, you can only get access to o1 if you are a Plus or Teams subscriber. Free tier users of ChatGPT stay on ChatGPT-4. Enterprise and Edu users will have access to o1 by Thursday this week.

The release of the o1 model makes us think that any further LLM announcements from OpenAI will be unlikely in the coming days, especially of the long-rumored ChatGPT-5.

We can’t wait to see what today’s announcement is, and there’s not long to go now! If it’s not going to be a new LLM, then we’d love to see something in the AI image space, or improvements to ChatGPT search, not to mention the long-awaited Sora.

OpenAI o1 is now out of preview in ChatGPT. What’s changed since the preview? A faster, more powerful reasoning model that’s better at coding, math & writing. o1 now also supports image uploads, allowing it to apply reasoning to visuals for more detailed & useful responses. pic.twitter.com/hrLiID3MhJ December 5, 2024

Hotly tipped for release, potentially today, is augmenting ChatGPT Advanced Voice Mode with the ability to use your device’s video camera as an input, so ChatGPT could ‘see’ you and use that to perform its queries. You could use it to ask ChatGPT how you’re looking today, and get some feedback, for example. Yes, prepare to be judged by AI.

In a demo of the technology, OpenAI has already shown us how ChatGPT could be used to roleplay a video interview, giving feedback on how well the person was doing.

A recent tweet by X user akshay suggests that screen sharing could be another input source for ChatGPT, so you could share your screen with the AI and get it to comment on what you’re looking at.

Looks like AVM with video and Screen Share is coming today #OpenAI #Day3 pic.twitter.com/5jah9thpP4 December 9, 2024

After comments made by OpenAI’s Chad Nelson at his recent C21Media keynote in London, it looks like we’re definitely going to see the release of OpenAI’s long-awaited video generation app Sora as part of the 12 Days of OpenAI. A video has surfaced showing a demo of “Sora V2” (which raises the question: what happened to version 1?).

According to comments made by Nelson in the keynote, the new V2 of Sora will feature 1-minute video output, which can be generated by text to video, text and image to video or text and video to video.

See the latest incredible Sora footage in this tweet by Ruud van der Linden:

Sora v2 release is impending:

* 1-minute video outputs
* text-to-video
* text+image-to-video
* text+video-to-video

OpenAI’s Chad Nelson showed this at the C21Media Keynote in London. And he said we will see it very very soon, as @sama has foreshadowed. pic.twitter.com/xZiDaydoDV December 7, 2024

While you’re waiting for the next announcement from OpenAI’s 12 Days of OpenAI, due at 10am PT today, perhaps you’d like to read a guide to using ChatGPT with Siri? With iOS 18.2 on the verge of being released, the next Apple update will give iPhone 16 and iPhone 15 Pro users access to OpenAI’s ChatGPT via Siri for the first time.

If you’re expecting to see GPT-5 as part of the “12 days of OpenAI”, I’ve got bad news for you, kind of.

Just last month, OpenAI CEO, Sam Altman, said during a Reddit AMA (Ask me anything), “We have some very good releases coming later this year! Nothing that we are going to call GPT-5, though.”

Well, later this year has arrived, so maybe we will see a new ChatGPT model over the next few days but don’t expect it to have a 5 in its name.

It sounds like OpenAI CEO Sam Altman is as disappointed as we are that there will be no new 12 Days of OpenAI announcements until Monday. He has tweeted to say he can’t wait until he can share more news with us and that, “Monday feels so far away”.

i am so, so excited for what we have to launch on day 3. monday feels so far away. December 7, 2024

If you’ve been wondering if 12 Days of OpenAI would continue over the weekend – on Saturday, December 7, and Sunday, December 8 – the company isn’t leaving us hanging.

OpenAI is pausing its 12-day daily announcements until Monday, December 9, writing “Continuing Monday at 10AM PT” on its homepage. This certainly gives us all the weekend to ponder what else is in store. Hopefully, next week, we will see the official reveal of Sora or at least some more details about it.

The first two days were busy with the launch of the o1 reasoning model, a new Pro tier, and a deep dive into reinforcement fine-tuning as demoed on the latest model.

While Sam Altman was not part of today’s Day 2 presentation in OpenAI’s 12 days of announcements, the executive did take to X (formerly Twitter) to shed some more light on reinforcement fine-tuning, including a promised public rollout in the first quarter of 2025.

today we are announcing reinforcement finetuning, which makes it really easy to create expert models in specific domains with very little training data. livestream going now: https://t.co/ABHFV8NiKc alpha program starting now, launching publicly in q1 December 6, 2024

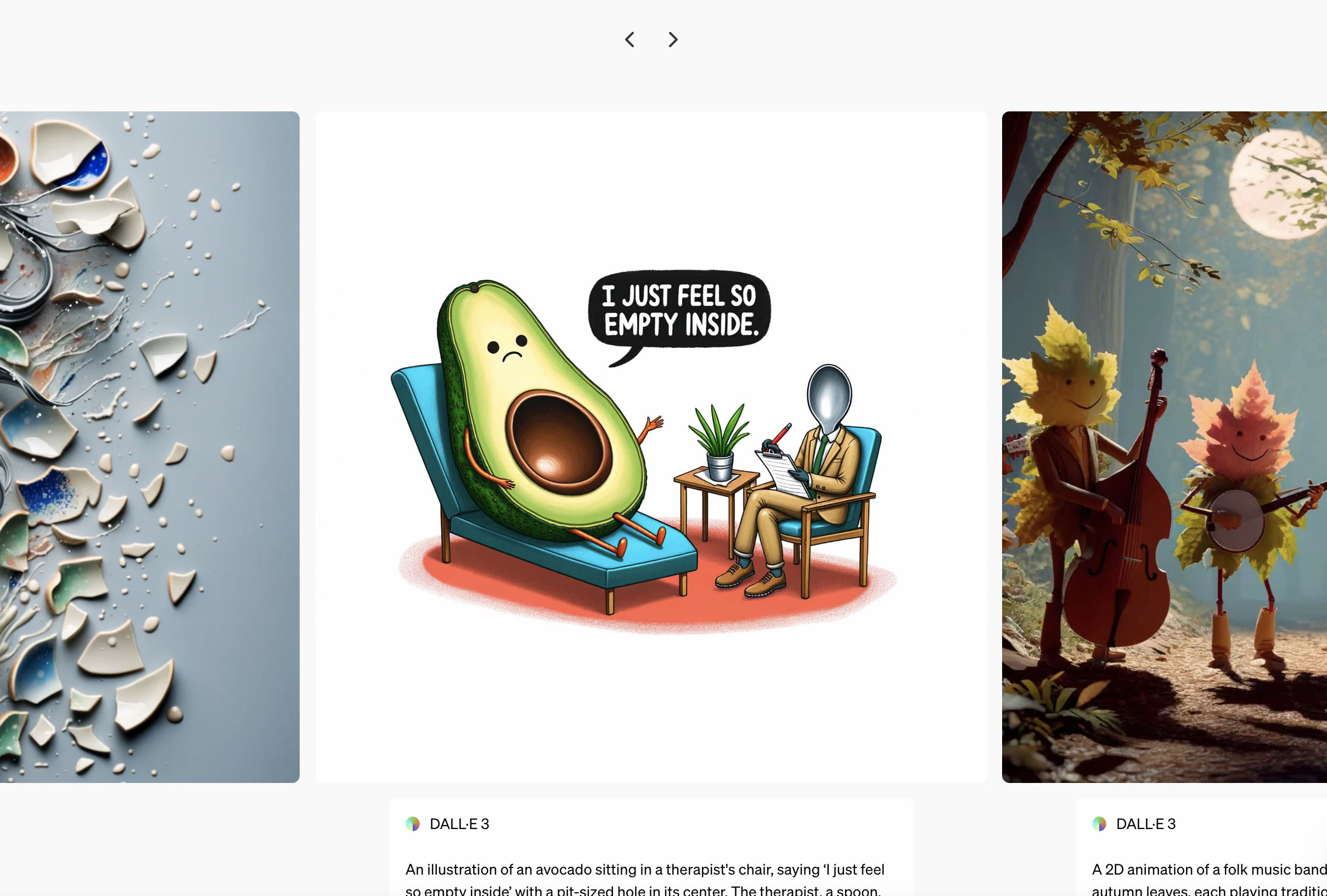

Of course, OpenAI had to bring us back down to earth with another joke, though this time we didn’t get to hear Sam Altman laugh at it. With Christmas fast approaching and the business being based in San Francisco – home to many a self-driving car – it’s pretty on point.

The joke went along the lines of: We live in San Francisco, self-driving vehicles are all the rage, and Santa’s been trying to get in on this. He’s been trying to make a self-driving sleigh, but it keeps hitting trees left and right. Any guesses? He didn’t pine-tune his models.

To help you better visualize it, TechRadar’s Editor-at-Large Lance Ulanoff asked ChatGPT to create an image of it.

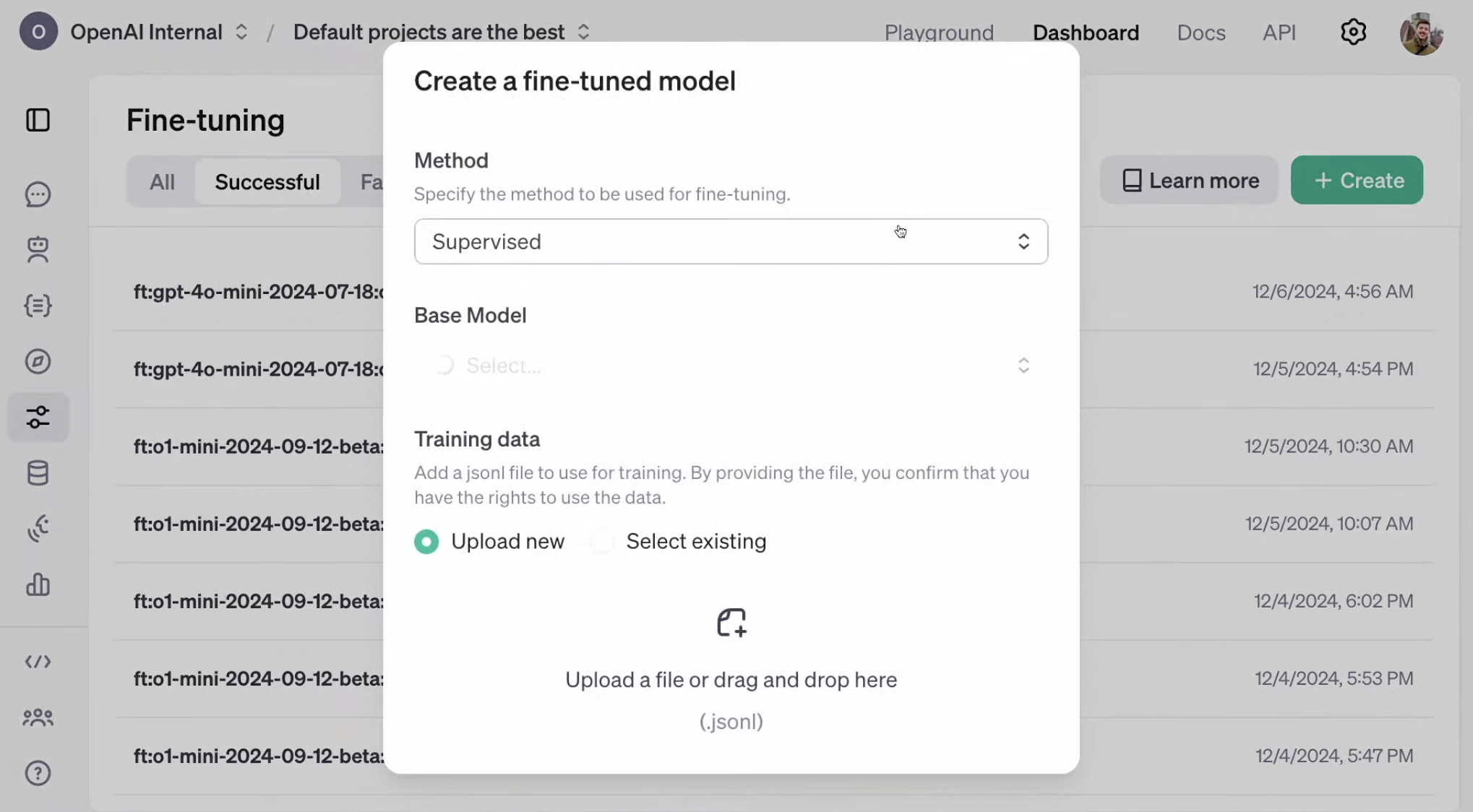

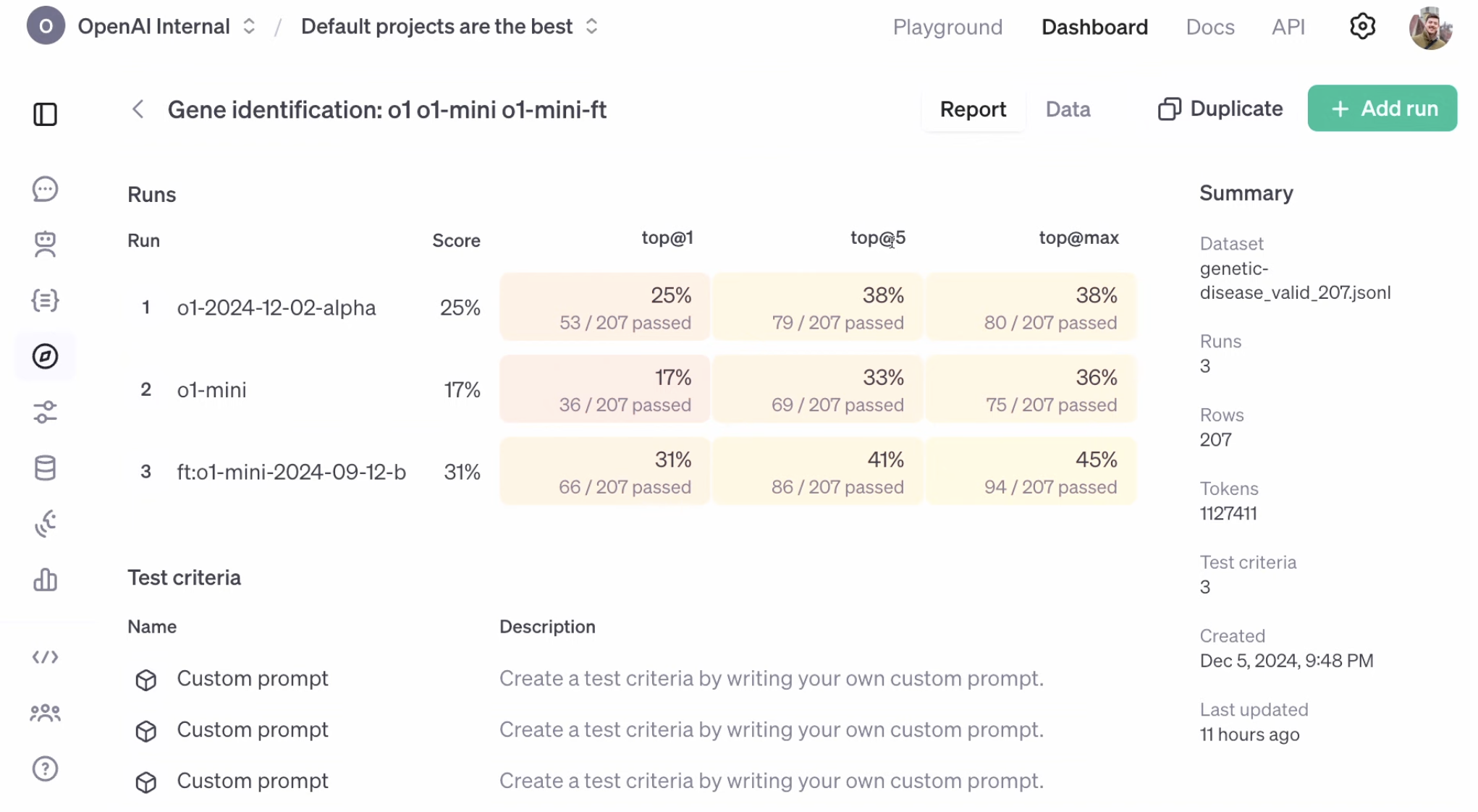

While this is not necessarily consumer-focused, OpenAI welcomed Justin Reese, a researcher for rare genetic diseases at Berkeley University. Now, in a live demo on o1-mini, we’re trying to have the model identify genes from a sample data pool that might be responsible for a disease. Of course, it will be fact-checked against known results, but it’s a good test of reinforcement fine-tuning for validation on o1-mini.

Furthermore, within o1-mini, you can refine and customize this fine-tuning process. The aim is to let you get the most out of the data by tweaking the model to your specific needs. It was noted that depending on the amount of data and the task, it can take anywhere from a few seconds to hours to complete.

The results at the end will be plotted within an evaluation dashboard with several presets.

Day 2 of ‘12 Days of OpenAI’ is now kicking off, and Sam Altman is not here. Instead, some other team members are teasing a tool that will arrive next year. It’s not a new model or anything in the realm of Sora, but OpenAI’s customization for models will now support reinforcement fine-tuning.

One of the nice things about Day 1 of OpenAI’s “12 days of OpenAI” was its brevity. When Sam Altman sat down before us with three of his engineers, we wondered if we were in for hours of exposition on major AI updates across every aspect of OpenAI’s business. Turned out that OpenAI was really spreading out all its news over 12 days. Yes, that means we’re in for a lot over the next week or so, but at least we can count on each day of news being digestible.

On the other hand, can we get to that Sora update and release today? Please? We’re just 10 minutes from finding out…

Here’s another prediction for “12 Days of OpenAI” announcements: videochatGPT. You might be familiar with the NORAD Santa Tracker. It’s a fun way to track Santa’s flight around the world on the 24th of December. This year I’m thinking that it’s the perfect time for OpenAI to take this a step further with an AI Santa video call demo as part of a new AI video ChatGPT.

So, imagine talking to ChatGPT just like you’re currently doing in ChatGPT Advanced Voice Mode, but you’re seeing a video avatar talk back to you. Santa Claus would be the perfect video avatar to kick this off, and it would delight children everywhere. Let’s just hope AI Santa doesn’t start hallucinating because the result could be hilarious…

I mentioned Sora earlier, but just in case you haven’t heard of the AI video generator, here’s an unbelievable trailer from OpenAI showing just what it’s capable of.

My personal favorite here is the prompt “a litter of golden retriever puppies playing in the snow. Their heads pop out of the snow.”

How can AI be so cute? I can’t quite wrap my head around it.

ChatGPT uses Dall-E 3 for image generation, which is right up there with the current crop of AI image generators on the market. However, it’s starting to look a little long in the tooth. Upcoming AI image generators like Flux have been steadily getting better and better.

Could we see a new version of Dall-E in our 12 days of OpenAI? It’s a hotly tipped possibility. If OpenAI can give us image generation that’s better than Flux Pro then it will certainly be a happy holiday season for everybody.

If you’re just joining us, OpenAI announced the official launch of o1 yesterday, with a “faster, more powerful reasoning model that’s better at coding, math & writing.”

If you want to try it out yourself, just head to ChatGPT and choose the o1 model from the dropdown. Give it a try on your math homework or a coding challenge. You might be surprised by the results.

OpenAI’s next livestream kicks off at 10 am PT / 1 pm ET / 6 pm GMT and you can watch it live directly from OpenAI’s website.

If you go to the “12 days of OpenAI” section of the website right now, you’ll see a gorgeous advent calendar, hinting at the exciting days to come. Just like an advent calendar, some days will be better than others, so I’m very intrigued to see if OpenAI keeps up the momentum or if today isn’t quite as stellar as yesterday.

Bookmark that link too, as you’ll be able to revisit all the highlights from the events even if you miss one of the livestreams. Or, you could keep checking in with TechRadar as we’ll keep you up to date on everything you need to know over the next week or so.

What are TechRadar’s predictions for day 2 of OpenAI’s “12 days of OpenAI”, I hear you cry? Well, Sam Altman said that we can expect “some big ones and some stocking stuffers” throughout the 12 days, and considering that yesterday we got a brand new version of ChatGPT (ChatGPT o1), I’d predict that today’s Xmas gift from OpenAI will be more of a “stocking stuffer” than one of the “big ones”.

Perhaps a minor update to ChatGPT search or ChatGPT Advanced Voice Mode. What am I really hoping for? I want ChatGPT search to be rolled out to all users on the free tier. Fingers crossed!

One of the biggest announcements we expect to see over the next week or so is the official launch of Sora, OpenAI’s video generator which can transform a text prompt into an incredible video.

Sora was leaked last month by unhappy artists who have accused OpenAI of taking advantage of them for unpaid research and development purposes. There’s definitely a debate to be had on how OpenAI trains its AI models, but that’s maybe one for another day.

In terms of what Sora offers, well, imagine one of the best AI image generators, but video. I’ve not tried Sora yet, but from the demos online, it looks pretty awesome.

OpenAI also announced ChatGPT Pro yesterday, but who is it actually aimed at?

For $200/month ChatGPT Pro gives you unlimited usage and an even smarter version of o1 with “more benefits to come!”

The fact is that for almost everybody the current $20 a month ChatGPT Plus option will be easily sufficient. Perhaps Pro can do special things (like writing “David Mayer” with no problems), but it seems hard to justify beyond a select few users who need massive computing power. To me, the $200 price point seems more like a price anchor. Essentially, it makes the $20 ChatGPT Plus price point look like really good value.

Yesterday, OpenAI kicked off the 12-day event with the announcement that the company’s o1 reasoning model would no longer be in preview, ready for everyone to try.

The AI model thrives on scientific equations and math problems, with OpenAI saying o1 can solve 83% of the problems in the International Mathematical Olympiad qualifying exam, a massive improvement on GPT-4o, which only scored 13%. The new model makes fewer errors than the preview version, cutting down on major mistakes by 34%.

That wasn’t the only reveal, however…

Welcome to TechRadar’s “12 days of OpenAI” live blog, where our resident AI experts will be taking you through the next 12 (well, 11) days of everything exciting coming out of the world’s most famous AI company.

What will Sam Altman reveal? How will these new updates and products change the way we use artificial intelligence? Who knows, but we’re incredibly excited to find out.