EXO Labs has published a detailed blog post about running Llama on Windows 98, and in a short video on social media it demonstrated a fairly powerful AI large language model (LLM) running on a 26-year-old Windows 98 Pentium II PC. The video shows an ancient Elonex Pentium II @ 350 MHz booting into Windows 98. EXO then fires up a custom pure C inference engine based on Andrej Karpathy's Llama2.c and asks the LLM to generate a story about Sleepy Joe. Surprisingly, it works, and the story is generated at a respectable pace.

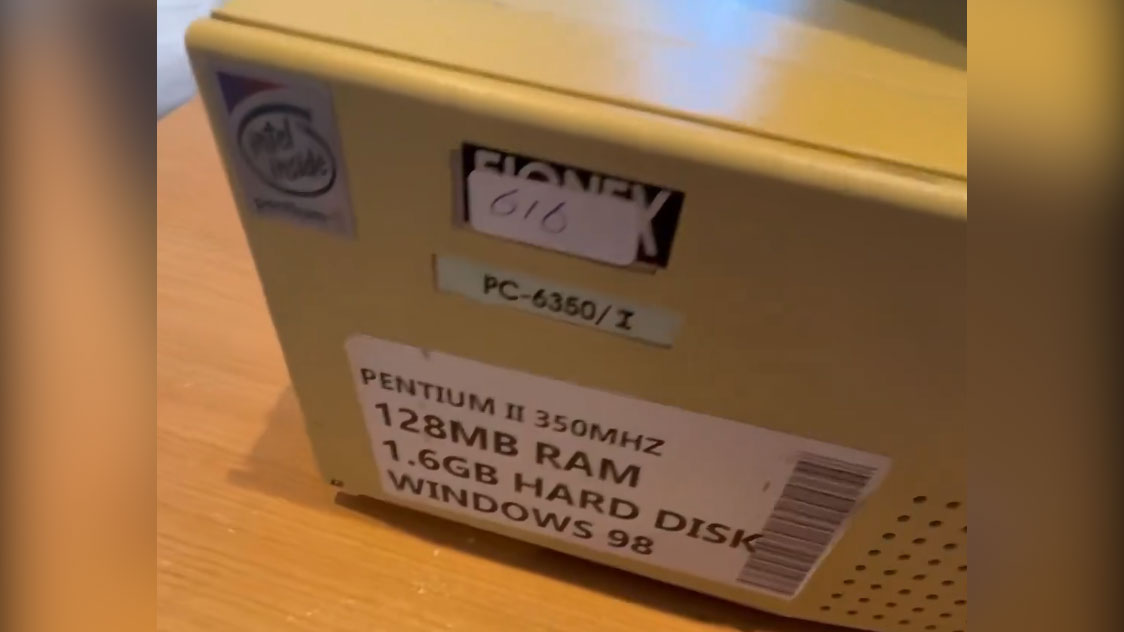

The LLM runs on a Windows 98 PC: 26-year-old hardware with an Intel Pentium II CPU and 128MB of RAM. (December 28, 2024)

This eye-opening feat is far from EXO Labs' end goal. The somewhat mysterious organization emerged in September with the mission of "democratizing artificial intelligence." It is made up of researchers and engineers from the University of Oxford. In short, EXO believes that control of artificial intelligence by a few large corporations is very bad for culture, truth, and other fundamentals of our society. EXO therefore hopes to "build open infrastructure to train cutting-edge models and enable anyone to run them anywhere." That way, ordinary people could train and run AI models on almost any device, and this crazy Windows 98 AI feat is a totemic demonstration of what can be done with (severely) limited resources.

Since the tweeted video is quite short, we were happy to find EXO's blog post about running Llama on Windows 98. It was published as Day 4 of the "12 Days of EXO" series (so stay tuned).

As readers might expect, it was a trivial matter for EXO to purchase an old Windows 98 PC from eBay as the basis for this project, but there were many hurdles to overcome. EXO explained that transferring data to the old Elonex-branded Pentium II was a challenge, which forced them to use “old school FTP” to transfer files over the old machine’s Ethernet port.

Compiling modern code for Windows 98 was likely an even bigger challenge. EXO was pleased to find Andrej Karpathy's llama2.c, which can be summarized as "700 lines of pure C code that can run inference on a model with Llama 2 architecture." Using this resource and the old Borland C++ 5.02 IDE and compiler (plus some minor tweaks), EXO was able to build the code into a Windows 98-compatible executable and run it. The completed code is available on GitHub.

35.9 tok/sec on Windows 98 🤯 This is a 260K LLM with Llama architecture. The results are in the blog post. https://t.co/QsViEQLqS9 pic.twitter.com/lRpIjERtSr (December 28, 2024)

Alex Cheema, one of the people behind EXO, thanked Andrej Karpathy for making the code available and was amazed by its performance: with a 260K-parameter LLM based on the Llama architecture, it achieved "35.9 tok/sec on Windows 98." It is perhaps worth emphasizing that Karpathy previously served as Director of AI at Tesla and was a founding team member of OpenAI.

Sure, a 260K-parameter LLM is tiny, but it runs pretty fast on an old 350 MHz single-core PC. According to the EXO blog, moving up to a 15M-parameter LLM resulted in generation speeds slightly above 1 tok/sec. Llama 3.2 1B, however, crawls along at just 0.0093 tok/sec.

BitNet is the bigger plan

By now, it should be clear that there's more to this story than just getting an LLM running on a Windows 98 machine. EXO rounded out its blog post by talking about the future, which it hopes BitNet will help democratize.

"BitNet is a transformer architecture using ternary weights," the post explains. Importantly, with this architecture a 7B-parameter model needs only 1.38GB of storage. That might still make a 26-year-old Pentium II squeak, but it's a breeze for modern hardware, or even for equipment from a decade ago.

EXO also emphasizes that BitNet is CPU-first, sidestepping expensive GPU requirements. Additionally, such models are claimed to be 50% more efficient than full-precision models and can run a 100B-parameter model on a single CPU at human reading speed (approximately 5 to 7 tok/sec).

Before we go, note that EXO is still looking for help. If you also want to keep the future of artificial intelligence from being locked inside massive data centers owned by billionaires and conglomerates, and you think you can contribute in some way, you can lend a hand.

For a more casual connection with EXO Labs, the group hosts a Discord Retro channel for discussing running LLMs on older hardware (e.g., old Macs, Game Boys, Raspberry Pis, etc.).