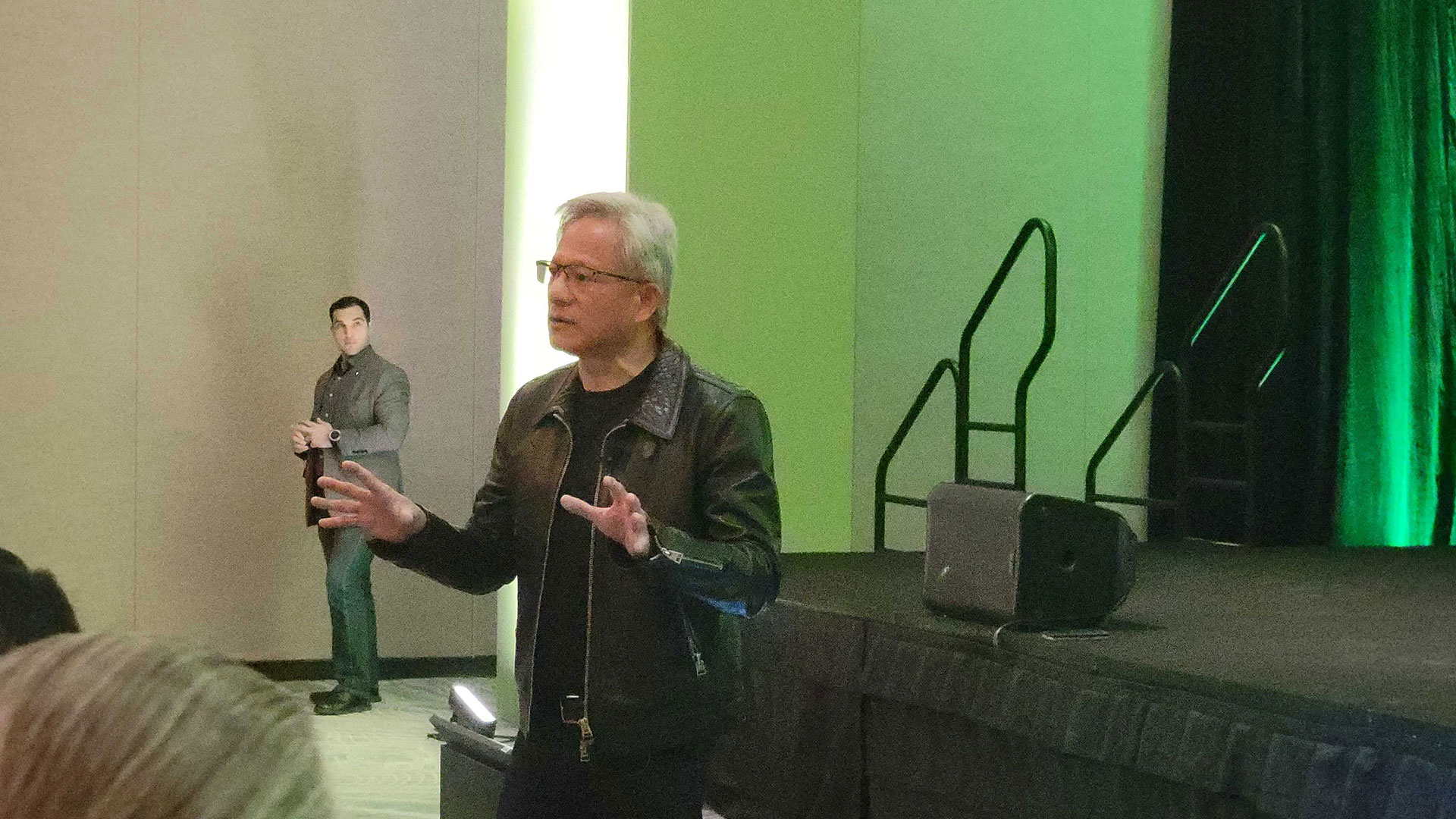

There was some confusion after the Blackwell RTX 50-series announcement last night. During a live Q&A today with Nvidia CEO Jensen Huang, we were able to ask for clarification on DLSS 4 and some of the other neural rendering techniques that Nvidia has demonstrated. Because AI is a key element of the Blackwell architecture, it is important to better understand how it is used and what that means for different use cases.

One of the main performance multipliers of DLSS 4 is multi frame generation. With DLSS 3, Nvidia rendered two frames and then used AI to interpolate an intermediate frame between them. This adds some latency to the game's rendering pipeline and also causes some frame pacing issues. At first glance, it looked like DLSS 4 would do something similar, but instead of generating one frame it would generate two or three, in other words multiple interpolated frames. It turns out that this is wrong.

Update: No, it isn't wrong. The initial understanding that multi frame generation uses interpolation is correct. That's all we can say for now, and hopefully this clears up any confusion. More detailed information will follow later.
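To make the interpolation idea concrete, here is a minimal sketch of where in-between frames would sit relative to the two rendered frames they are generated from. The even spacing and the function name `intermediate_positions` are our own assumptions for illustration; Nvidia has not detailed how DLSS 4 actually places or computes its generated frames.

```python
def intermediate_positions(generated_per_pair: int) -> list[float]:
    """Fractional positions of generated frames between two rendered frames.

    0.0 is the earlier rendered frame and 1.0 is the later one.
    Even spacing is an assumption made for illustration only.
    """
    steps = generated_per_pair + 1
    return [i / steps for i in range(1, steps)]


# One generated frame per rendered pair (DLSS 3 style) vs. three (multi frame generation)
print(intermediate_positions(1))
print(intermediate_positions(3))
```

The point of the sketch is that interpolation needs the later rendered frame before any in-between frame can be shown, which is where the added latency comes from.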

When we asked how DLSS 4’s multi-frame generation works and whether it still works with interpolation, Jensen boldly stated that DLSS 4 “predicts the future” rather than “interpolates the past.” This fundamentally changes how it works, what it requires in terms of hardware capabilities, and what we can expect in terms of latency.

The work is still being done without new user input, although Reflex 2's warp feature can at least mitigate some of this. But based on previously rendered frames, motion vectors, and other data, DLSS 4 will generate new frames to ensure smoother playback. It also has new hardware requirements that help it maintain better frame pacing.

[We think the misunderstanding or incorrect explanation stems from the overlap between multi frame generation and Reflex 2. There’s some interesting stuff going on with Reflex 2, which does involve prediction of a sort, and that probably got conflated with frame generation.]

We haven’t been able to try this out in person yet, so we can’t say with certainty how DLSS 4 multiframe generation compares to DLSS 3 frame generation and regular rendering. It sounds like there’s still a latency penalty, but how much it will be felt – and especially at different levels of RTX 50-series GPUs – is an important factor.

We know from DLSS 3 that if you only get a generated frame rate of 40 fps, for example, the game can feel very sluggish and laggy, even if it looks quite smooth. This is because user input is only sampled at 20 fps. With DLSS 4, this potentially means you could have a generated frame rate of 80 fps with user input sampled at 20 fps. Or to put it another way, we have generally felt that you need an input sampling rate of at least 40-50 fps for a game to feel responsive when using framegen.

With multi frame generation, and three generated frames for every rendered frame, this would mean that for a similar experience we could potentially need a generated frame rate of 160–200 fps. This might be great on a 240Hz monitor, and we'd love to see that, but at the same time, multi frame generation on a 60Hz or even 120Hz monitor might not be that great.
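The arithmetic behind these numbers can be sketched in a few lines. This assumes, as the article does, that user input is sampled once per rendered frame and that every rendered frame is followed by a fixed number of generated frames; the function names are ours and purely illustrative.

```python
def sampled_fps(displayed_fps: float, generated_per_rendered: int) -> float:
    """Input sampling rate when each rendered frame is followed by N generated frames."""
    return displayed_fps / (generated_per_rendered + 1)


def displayed_fps_needed(target_sampled_fps: float, generated_per_rendered: int) -> float:
    """Displayed frame rate required to reach a given input sampling rate."""
    return target_sampled_fps * (generated_per_rendered + 1)


# DLSS 3 style: one generated frame per rendered frame, 40 fps displayed
print(sampled_fps(40, 1))  # 20.0

# Multi frame generation with three generated frames, 80 fps displayed
print(sampled_fps(80, 3))  # 20.0

# Displayed rate needed for a 40-50 fps sampling rate with three generated frames
print(displayed_fps_needed(40, 3), displayed_fps_needed(50, 3))  # 160 200
```

Under the same assumptions, two generated frames per rendered frame would need 120–150 fps displayed to hit the same 40-50 fps sampling rate.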

Our other question was about the neural textures and rendering that were shown. Nvidia showed some examples that used 48MB of memory for standard materials and cut that to 16MB with "RTX Neural Materials". But what exactly does this mean, and how will it affect gameplay? We were particularly interested in whether this could help GPUs that don't have much VRAM, such as the RTX 4060 with 8GB of memory.

Unfortunately, Jensen says these neural materials will require special implementation on the part of content creators. He said Blackwell has new features that allow developers and artists to put shader code mixed with neural rendering instructions into materials. Descriptions of materials have become quite complex and describing them mathematically can be difficult. But Jensen also said that “AI can learn to do this for us.”

Some of these new features may not be available on previous generation GPUs because they do not have the necessary hardware capabilities to mix shader code with neural code. Or perhaps they will work, but won't be as performant. So to get the full benefit, you'll need a new 50-series GPU, and Neural Materials will require content work, meaning developers will have to adopt these new features specifically.

This means, firstly, that if we get an RTX 5060 with 8GB of VRAM, for example, that 8GB may still be the limiting factor in a number of existing games. It also means the 8GB RTX 4060 and 4060 Ti won't get a new lease on life from neural rendering. Or at least that's how we interpret things. But perhaps in the future an AI network will learn to do some of these things for us.