Support Vector Machines: A Progression of Algorithms

Support Vector Machine

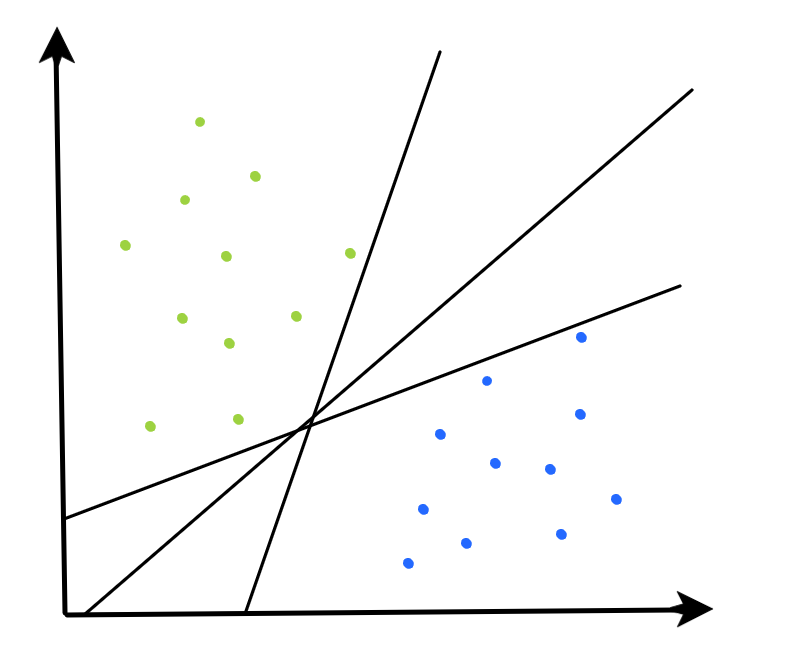

In general, there are two strategies commonly used to classify nonlinear data:

- Fit a nonlinear classification algorithm to the data in its original feature space.

- Enlarge the feature space to a higher dimension in which a linear decision boundary exists.

SVMs try to find a linear decision boundary in a higher-dimensional space, but they do so in a computationally efficient way using kernel functions, which let them find this decision boundary without explicitly applying a nonlinear transformation to the observations.

There are many ways to enlarge the feature space through some nonlinear transformation of the features (higher-order polynomials, interaction terms, etc.). Let’s look at an example in which we expand the feature space by applying a quadratic polynomial expansion.

Suppose our original feature set consists of the p features $X_1, X_2, \ldots, X_p$.

After applying a quadratic polynomial expansion, our new feature set consists of the 2p features $X_1, X_1^2, X_2, X_2^2, \ldots, X_p, X_p^2$.
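A minimal NumPy sketch of this expansion, assuming the data are stored as an n × p array. The helper name `quadratic_expand` is hypothetical. It appends the square of each feature, so p columns become 2p (no interaction terms); the columns are ordered as all linear terms followed by all squares, which makes no difference to a linear classifier.

```python
import numpy as np

def quadratic_expand(X):
    """Hypothetical helper: map [X_1, ..., X_p] to [X_1, ..., X_p, X_1^2, ..., X_p^2]."""
    X = np.asarray(X, dtype=float)
    return np.hstack([X, X ** 2])

X = np.array([[1.0, 2.0, 3.0],
              [0.5, -1.0, 4.0]])   # n = 2 observations, p = 3 features
X_quad = quadratic_expand(X)
print(X.shape, "->", X_quad.shape)  # (2, 3) -> (2, 6)
print(X_quad[0])                     # [1. 2. 3. 1. 4. 9.]
```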

Now we need to solve the following optimization problem.
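Assuming the SVC from earlier was written in the usual soft-margin form (maximize the margin $M$, with slack variables $\epsilon_i$, a budget $C$, and labels $y_i \in \{-1, +1\}$), the problem with the expanded features can be sketched as follows. This is a reconstruction of that standard formulation, so the earlier notation may differ; note also that this budget $C$ plays the opposite role to the `C` argument of scikit-learn's `SVC`, which is a penalty on margin violations.

$$
\begin{aligned}
\max_{\beta_0,\,\beta_{11},\,\beta_{12},\,\ldots,\,\beta_{p1},\,\beta_{p2},\,\epsilon_1,\ldots,\epsilon_n,\,M} \quad & M \\
\text{subject to} \quad & y_i\Big(\beta_0 + \sum_{j=1}^{p}\beta_{j1}x_{ij} + \sum_{j=1}^{p}\beta_{j2}x_{ij}^2\Big) \ge M(1-\epsilon_i), \quad i = 1,\ldots,n, \\
& \sum_{i=1}^{n}\epsilon_i \le C, \qquad \epsilon_i \ge 0, \\
& \sum_{j=1}^{p}\sum_{k=1}^{2}\beta_{jk}^2 = 1.
\end{aligned}
$$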

This is the same as the SVC optimization problem we saw earlier, except that our feature space now includes quadratic terms, so we have twice as many features. The resulting decision boundary is linear in the quadratic feature space but nonlinear when mapped back into the original feature space.
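A minimal sketch of this idea on the "circle within a circle" data, using the same `make_circles` settings as the plotting example later in this section. A linear SVC cannot separate the classes using the two original features, but the same linear SVC fit on the explicitly expanded features [X1, X2, X1², X2²] can, because the squared radius X1² + X2² is a linear function of the new features. The printed accuracies are training accuracies and are not claimed in advance here.

```python
import numpy as np
from sklearn import svm
from sklearn.datasets import make_circles

# "Circle within a circle" data (same settings as the plotting example)
X, Y = make_circles(n_samples=100, factor=0.3, noise=0.05, random_state=0)

# Explicit quadratic expansion: [X1, X2] -> [X1, X2, X1^2, X2^2]
X_quad = np.hstack([X, X ** 2])

# The same *linear* SVC, fit once in each feature space
acc_original = svm.SVC(kernel="linear", C=1).fit(X, Y).score(X, Y)
acc_quadratic = svm.SVC(kernel="linear", C=1).fit(X_quad, Y).score(X_quad, Y)

print(f"linear SVC, original features:  {acc_original:.2f}")
print(f"linear SVC, quadratic features: {acc_quadratic:.2f}")
```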

However, solving the problem above requires applying the quadratic polynomial transformation to every observation the SVC is fit to. This can be computationally expensive with high-dimensional data. Moreover, for more complex data, a linear decision boundary may not exist even after the quadratic expansion. In that case we must explore still higher-dimensional spaces before we find a linear decision boundary, and the cost of applying the nonlinear transformation to our data can become very high. Ideally, we would be able to find this decision boundary in the higher-dimensional space without explicitly applying the nonlinear transformation to our data.
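To see how quickly explicit expansions grow, the sketch below counts the columns produced by a full polynomial expansion of degree d (squares, cubes, and all interaction terms, plus a constant column), which is the binomial coefficient C(p + d, d). It cross-checks this closed form against scikit-learn's `PolynomialFeatures` on a small case; the helper name `n_poly_features` is hypothetical.

```python
from math import comb

import numpy as np
from sklearn.preprocessing import PolynomialFeatures

def n_poly_features(p, d):
    """Hypothetical helper: number of monomials of degree <= d in p variables (incl. the constant)."""
    return comb(p + d, d)

# Cross-check the closed form against scikit-learn on a small example (p = 5, d = 2)
poly = PolynomialFeatures(degree=2).fit(np.zeros((1, 5)))
print(poly.n_output_features_, n_poly_features(5, 2))  # 21 21

# How the explicit feature count grows with the original dimension p and degree d
for p in (10, 100, 1000):
    print(p, [n_poly_features(p, d) for d in (2, 3, 4)])
```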

Fortunately, it turns out that solving the SVC optimization problem does not require explicit knowledge of the feature vectors of the observations in our data set. We only need to know how the observations compare with one another in the higher-dimensional space. In mathematical terms, this means we only need to compute the pairwise inner products (chapter 2 here explains this in detail), where an inner product can be thought of as any value that quantifies the similarity of two observations.
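One way to check this claim with scikit-learn's `SVC`: fitting with `kernel="precomputed"` means the model receives only the matrix of pairwise inner products, never the feature vectors themselves. The sketch below assumes a linear kernel (plain dot products) so the two routes can be compared directly; the blob data are synthetic and chosen only for illustration.

```python
import numpy as np
from sklearn import svm
from sklearn.datasets import make_blobs

# Two clusters of 2-D points; the first 60 are used for training, the last 20 for testing
X, y = make_blobs(n_samples=80, centers=2, cluster_std=0.6, random_state=0)
X_train, y_train, X_test = X[:60], y[:60], X[60:]

# Route 1: pass the feature vectors and let the SVC compute linear-kernel inner products
clf_features = svm.SVC(kernel="linear", C=1).fit(X_train, y_train)

# Route 2: pass only the pairwise inner products (the Gram matrix), never the features
K_train = X_train @ X_train.T   # shape (60, 60): <x_i, x_j> for all training pairs
K_test = X_test @ X_train.T     # shape (20, 60): each test point against each training point
clf_gram = svm.SVC(kernel="precomputed", C=1).fit(K_train, y_train)

same_pred = np.array_equal(clf_features.predict(X_test), clf_gram.predict(K_test))
max_diff = np.max(np.abs(clf_features.decision_function(X_test)
                         - clf_gram.decision_function(K_test)))
print("same predictions:", same_pred)
print("largest decision-function difference:", max_diff)
```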

It turns out that for some feature spaces there exist functions (i.e., kernel functions) that let us compute the inner product of two observations without explicitly transforming those observations into that feature space. More details on this kernel “magic”, including when it is possible, can be found in Chapters 3 and 6 here.
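A small numerical check of this idea, using the degree-2 polynomial kernel K(x, z) = (x · z)² in two dimensions as an illustration (chosen because its feature map is small enough to write out; the RBF kernel discussed below has an infinite-dimensional feature space). For this kernel the explicit map is φ(x) = (x₁², √2·x₁x₂, x₂²), so both routes can be compared; the function names are illustrative.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])

def poly2_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel (x . z)^2, computed in the original 2-D space."""
    return np.dot(x, z) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

explicit = np.dot(phi(x), phi(z))   # transform both points first, then take the inner product
via_kernel = poly2_kernel(x, z)     # never leaves the original space
print(explicit, via_kernel)         # both are (up to rounding) 1.0
```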

Because kernel functions let us work in a higher-dimensional space, we are free to define decision boundaries that are much more flexible than those produced by a typical SVC.

Let’s look at a popular kernel function: the radial basis function (RBF) kernel.

Its formula is $K(x_i, x_{i'}) = \exp\left(-\gamma \sum_{j=1}^{p} (x_{ij} - x_{i'j})^2\right)$, where $\gamma > 0$ is a tuning parameter. For basic intuition, though, the details are not important: just think of it as a quantity that measures how “similar” two observations are in a high-dimensional (in fact, infinite-dimensional!) feature space.
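A minimal sketch of the RBF kernel, K(x, z) = exp(−γ‖x − z‖²), showing the "similarity" intuition: identical points score exactly 1, and the score decays toward 0 as points move apart. The value γ = 1 is an arbitrary choice for illustration; the hand-written version is compared against scikit-learn's `rbf_kernel`.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

def rbf(x, z, gamma=1.0):
    """RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    diff = np.asarray(x, dtype=float) - np.asarray(z, dtype=float)
    return np.exp(-gamma * np.dot(diff, diff))

a = np.array([0.0, 0.0])
b = np.array([0.1, 0.0])   # close to a
c = np.array([2.0, 0.0])   # far from a

print(rbf(a, a), rbf(a, b), rbf(a, c))  # 1.0, about 0.990, about 0.018

# scikit-learn's implementation gives the same pairwise values
K = rbf_kernel(np.vstack([a, b, c]), gamma=1.0)
print(np.allclose(K[0], [rbf(a, a), rbf(a, b), rbf(a, c)]))  # True
```

In scikit-learn's `SVC`, this γ is the `gamma` argument; larger values make the similarity fall off faster, giving more local (and more flexible) decision boundaries.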

Let’s return to the data we saw at the end of the SVC section. When we fit an SVM classifier with the RBF kernel to these data, we obtain a decision boundary that separates the classes much better than the SVC does.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn import svm
from sklearn.datasets import make_circles

# Create a circle within a circle
X, Y = make_circles(n_samples=100, factor=0.3, noise=0.05, random_state=0)

kernel_list = ["linear", "rbf"]

for fignum, k in enumerate(kernel_list, start=1):
    # Fit the model
    clf = svm.SVC(kernel=k, C=1)
    clf.fit(X, Y)

    # Evaluate the decision function on a grid covering the data
    xx = np.linspace(-2, 2, 50)
    yy = np.linspace(-2, 2, 50)
    X1, X2 = np.meshgrid(xx, yy)
    Z = clf.decision_function(np.c_[X1.ravel(), X2.ravel()]).reshape(X1.shape)

    # Plot the decision boundary (solid) and the margins (dashed)
    plt.figure(fignum, figsize=(4, 3))
    plt.contour(X1, X2, Z, levels=[-1.0, 0.0, 1.0], colors="k",
                linestyles=["dashed", "solid", "dashed"])

    # Circle the support vectors
    plt.scatter(
        clf.support_vectors_[:, 0],
        clf.support_vectors_[:, 1],
        s=80,
        facecolors="none",
        zorder=10,
        edgecolors="k",
    )

    # Plot the observations, colored by class
    plt.scatter(X[:, 0], X[:, 1], c=Y, cmap=plt.cm.Paired, edgecolor="black", s=20)

    # Show the kernel and its accuracy on the training data
    plt.title(f"Kernel = {k}: Accuracy = {clf.score(X, Y):.2f}")
    plt.axis("tight")

plt.show()
```

Ultimately, there are many different kernel functions to choose from, which gives us a great deal of freedom in the decision boundaries we can construct. This can be very powerful, but it is important to pair these kernel functions with appropriate regularization to reduce the risk of overfitting.
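One common way to choose that regularization in practice is cross-validation over the RBF SVM's two main knobs in scikit-learn: `C` (the penalty on margin violations, where larger values mean weaker regularization) and `gamma` (the kernel width). The sketch below assumes a noisier version of the circles data so that overfitting is possible; the noise level and the grid values are arbitrary choices for illustration.

```python
from sklearn import svm
from sklearn.datasets import make_circles
from sklearn.model_selection import GridSearchCV, train_test_split

# Noisier circles so that an overly flexible boundary can overfit (noise level is an assumption)
X, Y = make_circles(n_samples=300, factor=0.3, noise=0.2, random_state=0)
X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.3, random_state=0)

# C: larger values penalize margin violations more (weaker regularization)
# gamma: larger values give a more local, more wiggly decision boundary
param_grid = {"C": [0.1, 1, 10, 100], "gamma": [0.1, 1, 10, 100]}
search = GridSearchCV(svm.SVC(kernel="rbf"), param_grid, cv=5)
search.fit(X_train, Y_train)

print("best parameters:", search.best_params_)
print("cross-validated accuracy:", round(search.best_score_, 3))
print("held-out test accuracy:", round(search.score(X_test, Y_test), 3))
```

Because `GridSearchCV` refits the best parameter combination on the full training split by default, `search.score` evaluates that refit model on data it never saw during tuning.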