Why Are Cancer Guidelines Stuck in PDFs?

Two people with the same cancer—same type, same stage, same tumor size, same location—walk into different hospitals. This cancer has been studied extensively through clinical trials, case studies, and meta-analyses. Experts around the world have devoted decades to studying and treating this type of cancer. If you brought all these experts together in a room, they would agree on an evidence-based course of treatment (or further testing) for any given patient.

In an ideal world, both patients would receive this optimal treatment at whichever hospital they visit. But the reality of medicine is complex—doctors work under time pressure, have varying training backgrounds, and may not have immediate access to the latest research. This is where clinical guidelines come in. While individual responses to treatment vary, following evidence-based guidelines gives patients the best chance of a positive outcome.

Each year, top oncologists who specialize in a particular cancer catalog the subtypes and clinical scenarios they may encounter in practice, such as:

- What should I do if I have early-stage breast cancer, have previously undergone a partial mastectomy, and am currently pregnant?
- What if there is invasive cancer in the breast ducts and the tumor measures between 0.6 and 1 cm?

For each scenario, an expert panel reviews the available clinical evidence and recommends a course of action. This might mean ordering specific tests, prescribing treatment, or scheduling follow-up visits to monitor how things change. The panel meets regularly to review new evidence and update its guidance. Several groups around the world publish their own guidelines, and because certain treatments are unavailable in some countries, guidelines are often specific to a region.

The National Comprehensive Cancer Network (NCCN) is a consortium of 32 leading cancer centers in the United States. Each year, they convene a multidisciplinary panel of experts for each cancer type. These groups review the latest research, debate the evidence, and work to reach consensus on the best approaches to screening, diagnosis, treatment, and supportive care. The resulting guidelines—the NCCN Clinical Practice Guidelines in Oncology—are the most comprehensive and widely used standards of cancer care in the world.

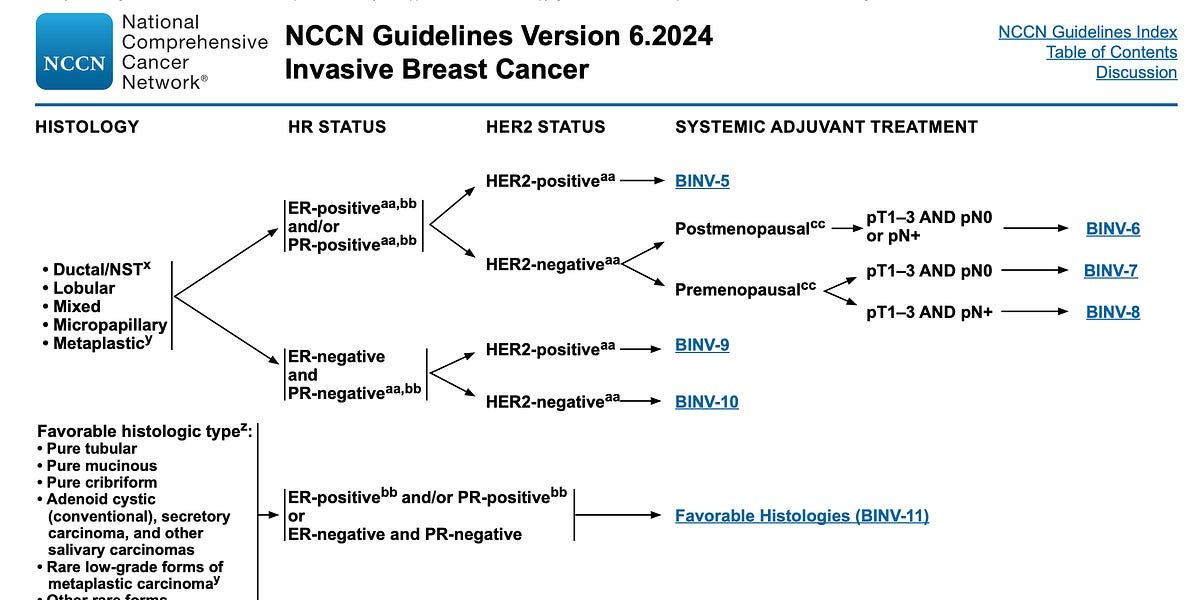

This is a page from the NCCN Breast Cancer Guidelines:

Each hyperlink (like BINV-6) leads to its own page, with its own flowchart, which in turn points to more pages. As the doctor applying the guidelines to a patient, your job is to walk the patient’s situation through the flowchart until you either reach something you don’t know (such as HER2 status), in which case you order a test to find out, or arrive at a recommended course of treatment.

The process isn’t completely standardized. Sometimes there are multiple treatment options, each with a similar level of support. Sometimes a patient has a condition that is relevant to treatment but is not covered by the guidelines. Even when doctors follow the guidelines, they still have to make judgment calls. But following them leaves less room for error: a patient is less likely to receive one treatment when another has much stronger clinical support.

Centers of excellence like the Mayo Clinic and MD Anderson achieve better cancer outcomes for several reasons: they are well funded, their oncologists are highly specialized, and they have broader access to potentially life-saving clinical trials. But another important factor is their systematic approach to care, including robust processes for implementing clinical guidelines. Many of these institutions also play a vital role in developing the NCCN Guidelines, contributing their expertise and research results.

At any hospital, care can vary. Standards may be ignored. Perhaps a postmenopausal patient receives a treatment better suited to premenopausal patients because her menopausal status was left out of her chart. Doctors see hundreds of patients, and even when guidance is available, things inevitably get overlooked.

There are many different ways to improve cancer outcomes. You can discover new medicines. You can improve surgical techniques. But one immediate, universal, and achievable way is to close the gap between evidence-based recommendations and the care patients actually receive.

Part of the problem is the guidelines’ format. The guidelines represent hundreds of thousands of hours of oncology experts’ time, but the end result is a dense PDF that is difficult to navigate.

Imagine an oncologist faced with a rare subtype of breast cancer that they might see once every few years. They first need to find the correct version of the PDF, then determine the right section based on the cancer’s characteristics, and then follow multiple hyperlinks across different pages while keeping track of various patient factors, all while managing a heavy patient load. The guidelines are revised constantly, and each new version is published as a separate PDF. It’s easy for physicians to miss updates or to keep referring to outdated versions buried among other clinical documents.

At the heart of the guidelines are decision trees. The panels that draft them put considerable effort into synthesizing the evidence into a decision-tree representation. But the documents that present these trees are difficult to follow.

With correctly structured data, machines should be able to interpret these guidelines. Charting systems could automatically suggest diagnostic tests for a patient. Alerts and “Are you sure?” prompts could appear when a course of treatment deviates from the guidelines. And when doctors need to consult the guidelines, there should be a faster, more natural way than hunting through a PDF.
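As a rough illustration of the “Are you sure?” check, here is a minimal Python sketch. It assumes the patient’s position in the guideline graph has already been resolved to a node with a set of recommended options; `Recommendation`, `check_order`, and the option names are hypothetical and not part of any charting system’s API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Recommendation:
    # Hypothetical record: the options a guideline lists at one node of the graph.
    node_id: str
    options: FrozenSet[str]


def check_order(rec: Recommendation, ordered: str) -> Optional[str]:
    """Return an "Are you sure?" prompt if the order is not a listed option, else None."""
    if ordered in rec.options:
        return None
    listed = ", ".join(sorted(rec.options))
    return (
        f"Are you sure? '{ordered}' is not among the guideline options "
        f"at {rec.node_id} ({listed})."
    )


if __name__ == "__main__":
    # Illustrative node id and option names only; not taken from any real guideline.
    rec = Recommendation("EXAMPLE-NODE", frozenset({"option A", "option B"}))
    print(check_order(rec, "option A"))  # None: the order matches the guideline
    print(check_order(rec, "option C"))  # a warning naming the listed options
```

The check deliberately warns rather than blocks, since the text above notes that doctors still have to make judgment calls.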

In addition to downloadable PDFs, organizations that draft guidelines should publish them in a structured, machine-interpretable format. Ideally, all guideline organizations would agree on a common format, and all patient data systems would be able to interpret it. But structuring data this way is not their area of expertise.

Recently, I had the opportunity to work with some talented oncologists as a software engineer. It opened a window into a world of cancer care and clinical guidelines that I never thought I would see. Even after I moved on to other projects, the problem of these guidelines being stuck in PDFs kept bothering me. I couldn’t stop thinking about the critical decision trees locked inside these dense documents.

So I built a small proof-of-concept tool to see if I could distill the guidance into something more structured that machines could understand. If we can get the guidelines into an appropriate format, we can build better interfaces for doctors to navigate them, and maybe even have computers help follow them.

I first defined a schema that can represent most of the information in the NCCN Guidelines. The guidelines look like decision trees, but because pages can reference one another, there may be cycles. So the complete set of guidelines for a given cancer is better described as a collection of disconnected directed graphs.
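Whether a cancer’s guidelines really split into several disconnected directed graphs can be checked mechanically by grouping nodes into weakly connected components (components found when edge direction is ignored). The Python sketch below is a generic graph routine, not the author’s code; node ids are plain strings and edges are hypothetical `(parent, child)` pairs.

```python
from __future__ import annotations

from collections import defaultdict


def weakly_connected_components(
    nodes: set[str], edges: list[tuple[str, str]]
) -> list[set[str]]:
    """Group nodes that are linked when edge direction is ignored."""
    neighbors: dict[str, set[str]] = defaultdict(set)
    for src, dst in edges:
        # Follow each relationship both ways; cycles are harmless thanks to `seen`.
        neighbors[src].add(dst)
        neighbors[dst].add(src)

    seen: set[str] = set()
    components: list[set[str]] = []
    for start in sorted(nodes):
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], set()
        while stack:  # iterative depth-first search
            node = stack.pop()
            component.add(node)
            for nxt in neighbors[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        components.append(component)
    return components
```

For example, nodes `A` through `E` with edges `A→B`, `B→A` (a cycle) and `C→D` yield three components: `{A, B}`, `{C, D}`, and the isolated `{E}`.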

Each point in the graph is represented as a node, whether it is a condition, qualifying characteristic, treatment option, or something else. These nodes also store relevant references and footnotes.

Each arrow or reference from one page to another in a flowchart is represented as a relationship between nodes.
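A schema along these lines could be sketched in Python with dataclasses. This is a minimal sketch, assuming one node type with a `kind` field and a plain parent-to-child relationship; the class names, the `PAGE` kind, and every field name are hypothetical, chosen to mirror the categories named above rather than the author’s actual schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class NodeKind(str, Enum):
    # Hypothetical kinds, following the categories named in the text.
    PAGE = "page"                  # page-reference node, e.g. "BINV-6"
    CONDITION = "condition"
    CHARACTERISTIC = "characteristic"
    TREATMENT = "treatment"
    OTHER = "other"


@dataclass
class Node:
    id: str
    kind: NodeKind
    text: str                      # the node's wording in the flowchart
    page: Optional[str] = None     # page the node appears on
    references: list[str] = field(default_factory=list)
    footnotes: list[str] = field(default_factory=list)


@dataclass(frozen=True)
class Relationship:
    source: str                    # parent node id
    target: str                    # child node id


@dataclass
class GuidelineGraph:
    nodes: dict[str, Node] = field(default_factory=dict)
    relationships: list[Relationship] = field(default_factory=list)

    def add(self, node: Node) -> None:
        self.nodes[node.id] = node

    def link(self, source: str, target: str) -> None:
        # Reject edges to nodes that were never added, a likely extraction error.
        for end in (source, target):
            if end not in self.nodes:
                raise KeyError(f"unknown node {end!r}")
        self.relationships.append(Relationship(source, target))

    def children(self, node_id: str) -> list[Node]:
        return [self.nodes[r.target] for r in self.relationships if r.source == node_id]
```

Keeping relationships in their own list, rather than nesting children inside nodes, lets the same node appear under several parents and tolerates cycles.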

To build the complete guideline graph, I first made a pass over the document with an LLM, creating a reference node for each page of the guidelines. Each page-reference node is the parent of the top node on that page. When another node points to a page, it points to that page’s reference node.
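The page-linking step above can be sketched without any LLM involved, once the first pass has produced page codes. In this minimal Python sketch, `page_tops`, `node_refs`, the `page:` id prefix, and the page code `BINV-1` are hypothetical; only `BINV-6` comes from the guidelines page discussed earlier.

```python
from __future__ import annotations


def page_node(code: str) -> str:
    # Hypothetical id convention for page-reference nodes.
    return f"page:{code}"


def link_pages(
    page_tops: dict[str, list[str]],
    node_refs: dict[str, list[str]],
) -> list[tuple[str, str]]:
    """Return (parent, child) edges for the page-reference scheme.

    page_tops maps a page code to the id(s) of the top node on that page.
    node_refs maps a node id to the page codes it points to.
    """
    edges: list[tuple[str, str]] = []
    # Each page-reference node is the parent of the top node(s) on that page.
    for code, tops in page_tops.items():
        edges.extend((page_node(code), top) for top in tops)
    # A node that points to another page links to that page's reference node.
    for node_id, codes in node_refs.items():
        for code in codes:
            if code not in page_tops:
                raise KeyError(f"{node_id} references unknown page {code}")
            edges.append((node_id, page_node(code)))
    return edges
```

The indirection keeps cross-page links cheap: a node only needs to know the page code it points to, not which node sits at the top of that page.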

I then used an LLM to read the guidelines in small chunks of pages and extract them as JSON conforming to the schema, passing in the page references from the first pass.
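Because LLM output can be malformed or drift from the schema, it helps to validate each chunk before saving it. The Python sketch below assumes a hypothetical JSON shape (`nodes` with `id`/`kind`/`text`, and `relationships` with `source`/`target`); the key names and allowed kinds are illustrative assumptions, not the author’s actual prompt format.

```python
from __future__ import annotations

import json

# Hypothetical schema the LLM is asked to emit for each chunk of pages.
REQUIRED_NODE_KEYS = {"id", "kind", "text"}
ALLOWED_KINDS = {"condition", "characteristic", "treatment", "other"}


def parse_extraction(
    raw: str, known_pages: set[str]
) -> tuple[list[dict], list[tuple[str, str]]]:
    """Parse one chunk of LLM output and reject anything that breaks the schema."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    nodes = data.get("nodes", [])
    ids: set[str] = set()
    for node in nodes:
        missing = REQUIRED_NODE_KEYS - set(node)
        if missing:
            raise ValueError(f"node missing keys: {sorted(missing)}")
        if node["kind"] not in ALLOWED_KINDS:
            raise ValueError(f"unknown node kind: {node['kind']!r}")
        ids.add(node["id"])

    edges: list[tuple[str, str]] = []
    for rel in data.get("relationships", []):
        src, dst = rel["source"], rel["target"]
        # Endpoints must be nodes in this chunk or page-reference nodes from the first pass.
        for end in (src, dst):
            if end not in ids and end not in known_pages:
                raise ValueError(f"relationship points to unknown node {end!r}")
        edges.append((src, dst))
    return nodes, edges
```

Rejecting a chunk outright, rather than silently dropping bad edges, makes it easy to re-prompt the model or flag the chunk for human review.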

I ran this extraction on the NCCN Breast Cancer Guidelines and saved the nodes and relationships into the repository. I ended up with a total of 271 nodes.

The next step was to build a graph viewer. I used the React Flow library to build an interface that displays all root nodes (pages with no incoming references). Clicking a node reveals its children, so you can follow a path through a series of nodes.
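The viewer itself used React Flow, but the data it needs up front can be computed independently: the root nodes and a parent-to-children map for expanding a node on click. A minimal Python sketch, assuming edges are hypothetical `(parent, child)` id pairs:

```python
from __future__ import annotations

from collections import defaultdict


def roots_and_children(
    nodes: list[str], edges: list[tuple[str, str]]
) -> tuple[list[str], dict[str, list[str]]]:
    """Return the nodes with no incoming edges and a parent -> children map."""
    indegree = {n: 0 for n in nodes}
    children: dict[str, list[str]] = defaultdict(list)
    for src, dst in edges:
        indegree.setdefault(src, 0)
        indegree[dst] = indegree.get(dst, 0) + 1
        children[src].append(dst)
    roots = sorted(n for n, d in indegree.items() if d == 0)
    return roots, dict(children)
```

One consequence worth noting: in this sketch, a group of pages that only reference each other in a cycle has no root, so it would never appear on the initial screen. A viewer would need some fallback, such as picking an arbitrary entry node per cycle.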

I wanted to understand how this structured representation could make it easier to go from a patient case to a guideline recommendation. I built an agent that takes a patient’s history and steps through the graph. First, it finds the top-level node that best matches the patient’s condition. It then looks at that node’s children and selects the one that best matches the patient. It repeats this until it reaches a leaf node or there isn’t enough information to continue.
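The traversal loop described above can be sketched in Python. The real agent used an LLM to pick the best-matching node; here that decision is abstracted as a `choose` callback, and `keyword_chooser` is a toy, hypothetical stand-in that matches keywords so the example runs offline. The node ids and labels are illustrative only.

```python
from __future__ import annotations

from typing import Callable, Dict, List, Optional, Set, Tuple

# A chooser sees the patient history and candidate node ids and returns the best
# match, or None if the history lacks the information needed to decide.
Chooser = Callable[[str, List[str]], Optional[str]]


def walk(
    patient: str,
    roots: List[str],
    children: Dict[str, List[str]],
    choose: Chooser,
    max_steps: int = 50,
) -> Tuple[List[str], str]:
    """Greedily descend the graph; return the path taken and why it stopped."""
    path: List[str] = []
    visited: Set[str] = set()
    current = choose(patient, roots)
    while current is not None:
        if current in visited:
            return path, "cycle"        # guidelines can loop back on themselves
        visited.add(current)
        path.append(current)
        options = children.get(current, [])
        if not options:
            return path, "leaf"         # reached a recommendation
        if len(path) >= max_steps:
            return path, "max_steps"
        current = choose(patient, options)
    return path, ("no_match" if not path else "insufficient_info")


def keyword_chooser(labels: Dict[str, Set[str]]) -> Chooser:
    """Toy stand-in for the LLM: pick the first candidate whose keywords all appear."""
    def choose(patient: str, candidates: List[str]) -> Optional[str]:
        words = set(patient.lower().split())
        for c in candidates:
            if labels.get(c, set()) <= words:
                return c
        return None
    return choose
```

An `insufficient_info` stop corresponds to the point where a clinician would order a test: a history that mentions an invasive tumor but not HER2 status stops at the node whose children branch on HER2.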

You can try the tool yourself here.

The data in this tool has not been reviewed and contains errors and omissions. Please do not use it for any clinical decision-making.

I spent several hours refining the extraction process (and less time on the agent); with more effort, you could get more accurate results. My current accuracy is about 70-80%. LLM extraction is a quick way to prototype and can be reliable with human review, but ideally these guidelines would be drafted in a structured format from the beginning.

I’m sure others are working on this problem. I purposely didn’t look around before starting, as that usually kills my motivation to build anything. If you’re researching this problem, working with clinical guidelines, or building a product where you think this could be useful, please get in touch. I’d love an excuse to spend more time on this and collaborate.

The data is still only semi-structured. The logic connecting nodes, and how to evaluate whether a node applies to a patient, still requires natural-language understanding of each node’s text. Future work could define a more structured schema that is easier to evaluate programmatically.
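One possible direction for a more structured schema is to express a node’s conditions as simple comparisons on named patient fields, so they can be evaluated without reading free text. This Python sketch is hypothetical throughout: the field names, operator set, and `evaluate` helper are assumptions, and the size bounds reuse the 0.6 to 1 cm example from earlier, treated as inclusive. A third result, `None`, marks conditions that depend on data the record doesn’t have yet.

```python
from __future__ import annotations

import operator
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

# Hypothetical comparison operators a structured schema might allow.
OPS = {
    "eq": operator.eq,
    "lt": operator.lt,
    "le": operator.le,
    "gt": operator.gt,
    "ge": operator.ge,
}


@dataclass(frozen=True)
class Condition:
    attribute: str   # hypothetical patient field, e.g. "tumor_size_cm"
    op: str          # key into OPS
    value: Any


def evaluate(conditions: List[Condition], patient: Dict[str, Any]) -> Optional[bool]:
    """True if every condition holds, False if any known one fails,
    None if the answer depends on a field the record doesn't have."""
    missing = False
    for cond in conditions:
        if cond.attribute not in patient:
            missing = True              # e.g. HER2 status not yet tested
            continue
        if not OPS[cond.op](patient[cond.attribute], cond.value):
            return False
    return None if missing else True
```

For instance, `[Condition("tumor_size_cm", "ge", 0.6), Condition("tumor_size_cm", "le", 1.0)]` evaluates to `True` for a 0.8 cm tumor, `False` for 1.5 cm, and `None` when no size is recorded. Returning `None` rather than `False` keeps “unknown” distinct from “doesn’t apply”, which is exactly the case where a test should be ordered.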

2024-12-23 23:36:37